While 5G networks are still rolling out around the world, industry leaders are already looking ahead to the next generation of wireless technology: 6G. Although 6G is in the early stages of development, it is generating considerable interest across the tech industry. In this article, we’ll explore the potential of 6G technology and its implications for US companies.

What is 6G?

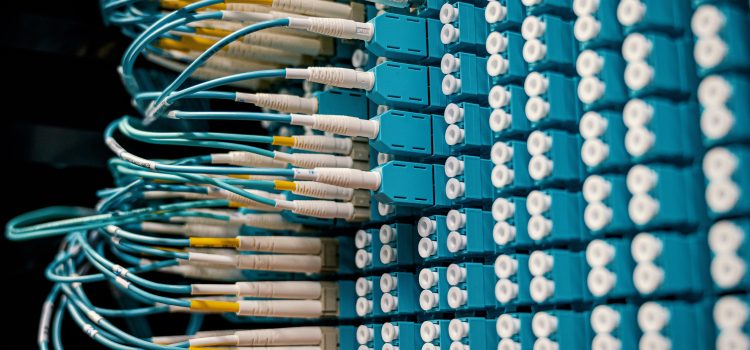

6G refers to the sixth generation of wireless technology, which is expected to succeed 5G in the coming years. While 5G promises faster speeds, lower latency, and more reliable connections than earlier generations, 6G is expected to go further on all three. Some experts predict that 6G could offer data speeds up to 100 times faster than 5G and latency as low as one microsecond.
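To put those projections in perspective, here is a rough back-of-envelope sketch in Python. It is illustrative only: the 5G baseline of 20 Gbit/s is an assumption (the commonly cited IMT-2020 peak downlink target, not a figure from this article), and the function names are made up for this example. The speed of light is the one fixed quantity.

```python
# Back-of-envelope arithmetic for the projections quoted above.
# ASSUMPTION: the 5G baseline below is the commonly cited IMT-2020 peak
# downlink target; real-world 5G speeds are far lower.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by SI definition
ASSUMED_5G_PEAK_GBPS = 20.0           # hypothetical baseline for illustration


def projected_peak_gbps(baseline_gbps: float, multiplier: float = 100.0) -> float:
    """Scale a baseline data rate by the projected speed-up factor."""
    return baseline_gbps * multiplier


def max_one_way_distance_m(latency_s: float) -> float:
    """Farthest a radio signal can travel in latency_s (vacuum, no processing delay)."""
    return SPEED_OF_LIGHT_M_PER_S * latency_s


def transfer_time_s(size_gigabytes: float, rate_gbps: float) -> float:
    """Ideal time to move a file of size_gigabytes at rate_gbps (8 bits per byte)."""
    return size_gigabytes * 8 / rate_gbps


if __name__ == "__main__":
    peak = projected_peak_gbps(ASSUMED_5G_PEAK_GBPS)
    print(f"Projected peak rate: {peak:.0f} Gbit/s")
    print(f"Reach in one microsecond: {max_one_way_distance_m(1e-6):.1f} m")
    print(f"Ideal time for a 10 GB file: {transfer_time_s(10, peak):.3f} s")
```

Under that assumed baseline, a 100-fold increase works out to about 2 Tbit/s. The latency figure is the more demanding one: a signal covers only about 300 meters in one microsecond, so a target that low could only apply to very short links, before any processing time is counted.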

Of course, 6G is still largely theoretical at this point, and much of what we know about it is based on speculation and projections. Nevertheless, industry leaders are already starting to explore the possibilities of 6G and how it could be used to transform industries ranging from healthcare to transportation.

Potential applications of 6G

So, what are some of the potential applications of 6G technology? One possibility is powering the next generation of virtual and augmented reality applications. With faster speeds and lower latency, 6G could make it possible to create truly immersive virtual environments that respond in real time to users’ movements and actions.

Another potential application is in the field of autonomous vehicles. As self-driving cars become more commonplace, they’ll need to communicate with one another and with infrastructure in real time. 6G could make this possible, enabling autonomous vehicles to make split-second decisions based on up-to-date information.

6G could also have significant implications for the healthcare industry. Its higher speeds and lower latency could support advanced telemedicine applications, allowing doctors to diagnose and treat patients remotely with greater accuracy and efficiency.

Implications for US companies

It’s still too early to say exactly what 6G will look like or which companies will dominate the market. Nevertheless, US companies are already positioning themselves for the 6G era. Major players such as Qualcomm, Intel, and IBM are investing heavily in research and development to stay ahead of the curve.

However, there are also concerns that the US could fall behind in the race to develop 6G technology. China, in particular, has been investing heavily in next-generation wireless technology, and some experts believe that it could emerge as a leader in the 6G space. As a result, US companies will need to remain vigilant and continue to invest in research and development if they hope to stay competitive in the years to come.

Conclusion

Although 6G technology is still in the early stages of development, it’s already generating excitement in the tech industry. With its potential to transform industries ranging from healthcare to transportation, 6G could be a game-changer. US companies will need to stay on the cutting edge of research and development if they hope to capitalize on the opportunities presented by this emerging technology.