Introduction

In the field of natural language processing (NLP), ChatGPT has emerged as one of the most widely used chatbots. It has captivated users worldwide with its AI-powered text generation. Developed by OpenAI, ChatGPT is an advanced language model capable of producing coherent, contextually relevant replies to a wide range of conversational inputs. It has been trained on a large quantity of text and uses deep neural networks to understand and produce human-like responses. This article explores the role of transfer learning and fine-tuning in improving ChatGPT's conversational abilities. It also considers their influence on the field of social robotics.

Understanding Transfer Learning and Fine-Tuning in Natural Language Processing

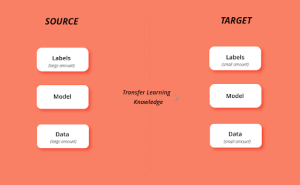

Transfer learning and fine-tuning are two techniques widely used in NLP to improve the performance of pre-trained language models. They allow a model to take knowledge acquired from a large dataset on one task and apply it to another, improving its ability to understand and generate language. In transfer learning, a previously trained language model is reused for a new task or domain, drawing on the knowledge encoded in its pre-trained weights. Fine-tuning, by contrast, continues training those pre-trained weights on a specific task or dataset to improve performance on that particular objective.
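To make the distinction concrete, here is a minimal sketch in plain Python. It is a toy, not how ChatGPT is actually trained: the two-weight model, the parameter names `backbone` and `head`, and the target data are all assumptions made for illustration. The "transfer" run keeps the pre-trained backbone frozen and trains only a new head, while the "fine-tune" run updates every weight.

```python
def predict(params, x):
    # Toy two-layer model: a "backbone" weight followed by a "head" weight.
    return params["head"] * (params["backbone"] * x)


def mse(params, data):
    # Mean squared error of the model over (x, y) pairs.
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)


def train(params, data, trainable, lr=0.01, steps=200):
    """Gradient descent that updates only the parameters named in `trainable`."""
    params = dict(params)  # copy, so the pre-trained weights are left untouched
    for _ in range(steps):
        grads = {"backbone": 0.0, "head": 0.0}
        for x, y in data:
            err = predict(params, x) - y
            grads["backbone"] += 2 * err * params["head"] * x / len(data)
            grads["head"] += 2 * err * params["backbone"] * x / len(data)
        for name in trainable:
            params[name] -= lr * grads[name]
    return params


# Hypothetical "pre-trained" weights and a new target task (y = 3x).
pretrained = {"backbone": 1.5, "head": 1.0}
target_data = [(x, 3.0 * x) for x in (-2.0, -1.0, 0.5, 1.0, 2.0)]

# Transfer learning: reuse the frozen backbone, train only the head.
transferred = train(pretrained, target_data, trainable=["head"])

# Fine-tuning: keep training all pre-trained weights on the new task.
fine_tuned = train(pretrained, target_data, trainable=["backbone", "head"])

print("frozen backbone:", transferred["backbone"])
print("fine-tuned backbone:", fine_tuned["backbone"])
```

In both runs the error on the new task drops well below that of the untouched pre-trained weights. The difference is which weights moved: the frozen run leaves `backbone` exactly as it was, while the fine-tuned run shifts it toward the new task.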

These techniques have proven essential for improving the performance of NLP models, particularly with the emergence of large pre-trained language models such as ChatGPT, which can be fine-tuned on specific datasets to achieve strong conversational performance.

The Impact on ChatGPT's Conversational Performance

Without transfer learning and fine-tuning, a model like ChatGPT may produce incoherent replies that lack fluency or fail to engage users effectively. With these techniques, it can generate more coherent and engaging replies. In particular, fine-tuning the pre-trained model for specific tasks or domains can greatly improve its conversational ability.

Coherence: Transfer learning helps ChatGPT understand the context of a conversation and generate more coherent replies. Fine-tuning allows the model to grasp the nuances of specific domains, which results in more relevant and consistent replies. For example, fine-tuning ChatGPT on a dataset of medical chatbot conversations can help it handle healthcare terminology, symptoms, and treatments more accurately. This leads to better user interactions.

Fluency: Transfer learning enables ChatGPT to generate fluent replies by drawing on the linguistic knowledge contained in the pre-trained model. Fine-tuning improves fluency further by training the model on task-specific data. As a result, ChatGPT's answers become more fluent and natural.

Engagement: Transfer learning and fine-tuning also improve user engagement with ChatGPT. The model can follow the context of the conversation and offer personalized replies, which keeps users more involved. For example, fine-tuning on a collection of customer reviews and feedback helps ChatGPT respond to users in an empathetic and understanding way. In healthcare settings, this kind of tone can improve patient satisfaction.
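Fine-tuning on a domain-specific dataset, as in the medical example, usually starts with putting example conversations into a machine-readable form. The sketch below shows one way to do that with JSON Lines and a chat-style `messages` layout. The question and answer pairs, the system prompt, and the helper names are hypothetical, and the exact schema depends on the fine-tuning tool you use, so treat this as an assumption to check against that tool's documentation.

```python
import json

# Hypothetical question/answer pairs; a real dataset would come from vetted domain sources.
raw_pairs = [
    (
        "What does 'hypertension' mean?",
        "Hypertension is the medical term for high blood pressure.",
    ),
    (
        "What is a common symptom of dehydration?",
        "Thirst is a common symptom of dehydration.",
    ),
]

# Assumed system prompt that sets the tone for every training example.
SYSTEM_PROMPT = "You are a careful assistant that explains medical terms in plain language."


def to_chat_record(question, answer):
    # One training example: a short conversation ending in the desired reply.
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs):
    # JSON Lines: one JSON object per line, one line per training example.
    return "\n".join(json.dumps(to_chat_record(q, a)) for q, a in pairs)


jsonl_text = to_jsonl(raw_pairs)
print(jsonl_text)
```

Keeping one example per line makes the dataset easy to stream, shuffle, and inspect before any training run.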

Importance of Transfer Learning and Fine-Tuning

Transfer learning and fine-tuning have driven major advances in NLP, especially with large pre-trained language models such as ChatGPT. These techniques are essential for making efficient use of computational resources, because they allow pre-trained models to be reused for specific tasks. They also improve model performance, particularly when domain-specific knowledge is critical.

The benefits of transfer learning and fine-tuning include:

Efficient use of computational resources: Training large language models requires substantial computing power. Transfer learning lets us build specialized models more efficiently by reusing pre-trained weights.

Improved performance: Pre-trained models encode broad linguistic knowledge, which makes them a strong starting point for specific tasks. Fine-tuning them on task-specific datasets improves performance on downstream tasks.

Domain-specific knowledge: Fine-tuning pre-trained models on domain-specific datasets lets them capture the nuances and complexities of a particular field. For example, in the medical domain, fine-tuning on medical text can improve a model's performance in clinical decision support or healthcare chatbots.

Comparison to Other Techniques

Although transfer learning and fine-tuning are powerful ways to improve ChatGPT's conversational ability, other techniques exist as well, each with its own advantages and limitations. These include data augmentation, curriculum learning, and multi-task learning.

Data Augmentation: Expanding the existing training data with synthetic examples can help improve model generalization.

Curriculum Learning: Gradually increasing the difficulty of the training examples during training can improve the model's ability to learn.

Multi-Task Learning: Training a model on several related tasks at the same time can improve its overall performance.
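The first two techniques in this list can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the synonym table is hypothetical, synonym swapping is just one of many augmentation strategies, and sentence length is a deliberately crude stand-in for "difficulty" in the curriculum.

```python
import random

# Hypothetical synonym table; real augmentation might use a thesaurus or a paraphrasing model.
SYNONYMS = {
    "help": ["assistance", "support"],
    "issue": ["problem"],
    "quickly": ["fast", "promptly"],
}


def augment(sentence, rng, swap_prob=0.5):
    """Data augmentation: create a synthetic variant by swapping in synonyms."""
    words = []
    for word in sentence.split():
        options = SYNONYMS.get(word.lower())
        if options and rng.random() < swap_prob:
            words.append(rng.choice(options))
        else:
            words.append(word)
    return " ".join(words)


def difficulty(sentence):
    # Assumed proxy: sentences with more words count as harder.
    return len(sentence.split())


def curriculum_batches(sentences, batch_size):
    """Curriculum learning: order examples from easy to hard, then batch them."""
    ordered = sorted(sentences, key=difficulty)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


rng = random.Random(0)  # fixed seed so the run is repeatable
corpus = [
    "please help me",
    "I need help with a billing issue quickly",
    "thanks",
    "my order has an issue",
]

# Add one synthetic variant per original sentence, then schedule easy examples first.
augmented = corpus + [augment(sentence, rng) for sentence in corpus]
batches = curriculum_batches(augmented, batch_size=3)

for step, batch in enumerate(batches):
    print(step, batch)
```

A training loop would then consume `batches` in order, so the model sees short, simple examples before longer ones.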

Conclusion

Transfer learning and fine-tuning have played a key role in improving ChatGPT's ability to converse, and they have advanced the field of NLP as a whole. By building on existing knowledge and adapting models to particular tasks or domains, ChatGPT can produce responses that are more coherent, fluent, and engaging for its users. This makes a more personalized conversational experience possible. As NLP continues to advance, these techniques will remain essential for building capable and robust conversational models. They will open new opportunities in human-robot interaction, social robotics, and many other practical applications.