Introduction

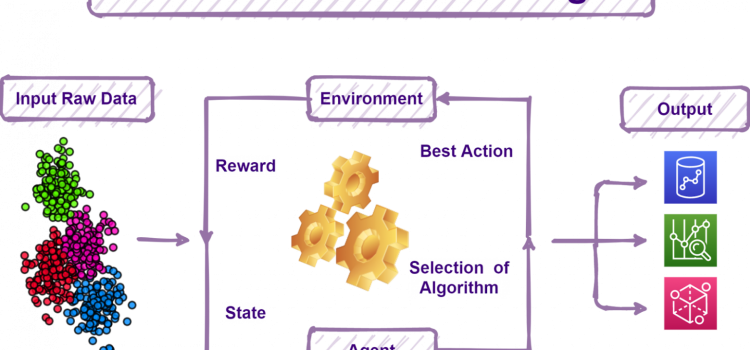

Agents have been successful in complex tasks with reinforcement learning across numerous environments. But as challenges and environment complexities increase, classic reinforcement learning methods might struggle to find optimal solutions quickly. HRL, a multifaceted tool, is applied to solve complex tasks in various domains by breaking them down into simpler sub-problems.

A look at HRL, combining Q-Learning and hierarchical policies, is presented in this article. In this four-room setting, understanding HRL and building our own Option-Critic framework can yield valuable insights into its advantages and uses.

Understanding Hierarchical Reinforcement Learning

HRL takes a cue from how humans break down complex tasks into smaller subtasks. We break down complex processes into smaller modules in coding just like HRL simplifies and streamlines long-term infrastructure plans for cities. Higher-ranking policy levels set overarching objectives while lower-ranking policy levels look after finer details.

Consider an example where an agent has to arrange and set a dining table. To grasp and position dishes adeptly is the agent’s goal. To precisely maneuver the limbs and fingers, it involves working on a more intricate level. Learning and decision-making become more efficient with HRL since the agent can gain useful policies across multiple levels.

The Options-Critic Framework: Architecture and Benefits

Among the well-known architectures for HR is the Option-Critic framework. In this framework, the agent’s decision-making process involves two main components: the Critic: This class is intended for managing various critiques in an organized manner The Option part contains the upper-level meta-policy (Q_Omega) and termination policy (Termination Policy), which determine the lower-level policies (Q_U). In contrast, the Critic part assesses the alternatives and offers feedback to the upper management.

The Options-Critic framework provides a range of advantages during training and exploration. Various cities’ annual rainfall levels are shown in this framework. Since higher-level policies have numerous environmental steps, shorter episodes lead to quicker reward propagation and improved learning. Extending the scope of the exploration helps the agent acquire more valuable insights and create better policies compared to limited actions.

Formulating policies for constructing at different levels.

The Options-Critic framework requires the establishment of the overarching meta-policy (Q_Omega), specific policies (Q_U), and termination policies. Q_Omega presents a 2D table containing Q-options for lower-level policies. Epsilon-greedy sampling is utilized to select the options based on the number of cities noptions.

The SoftMax policy Q_U is used where actions are sampled from the decision output of the SoftMax layer. The table is an essential part in following orders based on higher-ranking policy. The termination policy specifies the switch to another option when it is required for various levels of policies.

The Role of the Critic: Evaluating Options

The Judgment component within the Options-Critic framework appraises the options generated by the meta-policy. To assess the value of an option, this feedback provides useful insights by evaluating how good it is. The Critic is an extension of the Actor-Critic framework, where Q_Omega and Q_U are part of the Option part, and Q_U’s value is influential in the Critic.

A hierarchical Reinforcement Learning Agent is being trained and tested.

2D four-room environment is portrayed in this section to showcase agent training and testing. We develop a notebook in Colab to train and test our project. While training the Q_Omega policy, options and termination policies are established, which are later utilized in testing to steer the lower-level policies’ actions. Having goals altered after 1000 episodes has significantly enhanced agent performance.

Real-World Applications

1. Robotics

Robots often operate in unpredictable environments. HRL with Options-Critic allows them to adapt quickly and handle complex tasks like object manipulation, walking, or opening doors.

2. Autonomous Vehicles

Self-driving cars must make decisions like “stay in lane,” “switch lanes,” or “stop at light.” These can be learned as options, improving safety and reliability.

3. Video Game AI

Games like StarCraft or Dota 2 involve long strategies. Using the Options-Critic Framework helps game AIs plan multi-stage moves, much like real human players.

4. Smart Assistants

Digital assistants like Siri or Alexa need to understand context—whether to play music, answer a question, or set a reminder. HRL allows these systems to organize behavior efficiently and respond better to user needs.

Limitations and Challenges

While powerful, the Options-Critic Framework has some challenges:

- Computational cost: More complex models need more training time.

- Exploration: It may still miss better options if exploration is poor.

- Stability: Learning termination functions and option policies at the same time can make training unstable.

Ongoing research is working to improve these areas by combining HRL with other methods like curiosity-driven exploration and meta-learning.

The Future of Hierarchical Learning in AI

AI systems are moving beyond reactive behavior. They’re becoming more thoughtful, making decisions with planning, memory, and hierarchical structure.

The Options-Critic Framework is one step closer to general intelligence—where machines can tackle any task using learned strategies, adapt to new environments, and think ahead.

As researchers improve training techniques, we’ll likely see HRL used in more real-world products, from home robots to healthcare systems and advanced decision-making software.

Conclusion

The Options-Critic Framework is a breakthrough in hierarchical reinforcement learning, offering a smarter, more structured way for AI agents to learn. By breaking tasks into smaller strategies (options) and letting agents choose when to use them, this model improves learning speed, efficiency, and adaptability. While there are still hurdles like computational demands and training stability, the potential for real-world impact is enormous. As AI systems evolve, the Options-Critic approach is set to play a key role in making them more capable, intelligent, and ready for complex challenges.